I have about 6TB of climate data to manage, and more on the way. Besides a decent array of hard drives and a clever backup strategy, what tools can I use to help maintain these data in a useful way? They’re stored as NetCDF files, a decent (if not user-friendly) format for multidimensional data (latitude, longitude, time).

We’re mostly interested in summaries of these data right now (CLIMDEX, BIOCLIM, and custom statistics), and once these are calculated the raw data themselves will not be accessed frequently. But infrequently is not the same as never, so I can’t just put them on a spare hard drive in a drawer.

What are the compression options available to me, and what is the trade-off between speed and size for the NetCDF files I’m working with?

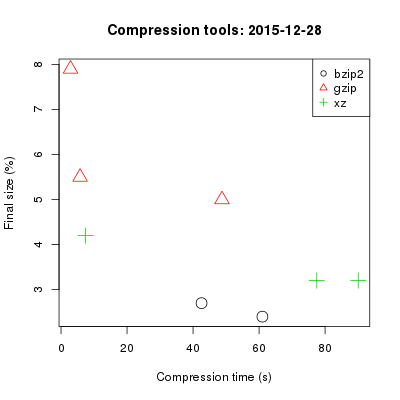

There are three major file compression tools on Linux: the venerable gzip, bzip2, and the newer xz. I tried them out on a 285MB NetCDF file, one of the very many I’m working with. I tested the maximum-compression (-9) and fastest (-1) options for each of the three tools, plus the default (-6) for gzip and xz. bzip2 doesn’t have the same range of options: its -1 to -9 flags set the block size rather than an effort level, and --best (-9) is already the default, so I only compared --best and --fast (-1).
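A comparison like this can be roughly reproduced from Python, whose standard library ships `gzip`, `bz2`, and `lzma` modules for the same three formats. The sketch below is not the procedure used for the numbers in this post (those came from the command-line tools), and timings from the Python bindings will differ from the CLI. The `CANDIDATES` list and the `benchmark` function are illustrative names, not part of any library.

```python
import bz2
import gzip
import lzma
import time

# Illustrative list mirroring the options compared in this post.
# Each entry is (label, compress function, decompress function).
CANDIDATES = [
    ("gzip -1", lambda d: gzip.compress(d, compresslevel=1), gzip.decompress),
    ("gzip -6", lambda d: gzip.compress(d, compresslevel=6), gzip.decompress),
    ("gzip -9", lambda d: gzip.compress(d, compresslevel=9), gzip.decompress),
    ("bzip2 -1", lambda d: bz2.compress(d, compresslevel=1), bz2.decompress),
    ("bzip2 -9", lambda d: bz2.compress(d, compresslevel=9), bz2.decompress),
    ("xz -1", lambda d: lzma.compress(d, preset=1), lzma.decompress),
    ("xz -6", lambda d: lzma.compress(d, preset=6), lzma.decompress),
    ("xz -9", lambda d: lzma.compress(d, preset=9), lzma.decompress),
]


def benchmark(data, candidates=CANDIDATES):
    """Time compression and decompression of `data` for each candidate.

    Returns a list of dicts with the label, the compressed size as a
    fraction of the original, and both timings in seconds.
    """
    results = []
    for label, compress, decompress in candidates:
        start = time.perf_counter()
        packed = compress(data)
        compress_s = time.perf_counter() - start

        start = time.perf_counter()
        restored = decompress(packed)
        decompress_s = time.perf_counter() - start

        # Sanity check: the round trip must be lossless.
        if restored != data:
            raise ValueError(f"{label}: round trip changed the data")

        results.append({
            "label": label,
            "ratio": len(packed) / len(data),
            "compress_s": compress_s,
            "decompress_s": decompress_s,
        })
    return results
```

To try it on a real file, read the bytes first, for example `benchmark(open("sample.nc", "rb").read())`, where `sample.nc` is a placeholder name. This holds the whole file in memory, which is fine for a few hundred megabytes but not for much larger inputs.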

There wasn’t a huge difference in compression for this type of file: every option shrank it by more than 90%. The best (bzip2 --best) produced a file 2.4% of the original size, and the worst (gzip -1) 7.9%.

Speed, though: compressing a single file took anywhere from 2.9 to 90.0 seconds. Decompression took about 1.6 seconds for gzip and xz regardless of option, and 3.2 to 3.6 seconds for bzip2.

For this file format, xz was useless: slow and not the most effective. bzip2 produced the smallest files, but not by a huge amount. gzip was fastest, but produced the largest files even at its best setting.

This matches what I expected, but the specifics are useful:

- Using bzip2 --best I could get one set of files from 167GB to 4GB, but it would take 9.5 hours to do so.

- Using gzip -1 I could get that set of files down to 13GB, and it would only take 24 minutes.
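The arithmetic behind those two bullets can be sketched as follows. It assumes every file in the set is about the size of the 285MB sample and compresses like it, and that GB and MB are decimal units; both are simplifications. The per-file times printed here are back-calculated from the 9.5 hour and 24 minute totals rather than taken from the measurements, and the function names are made up for illustration.

```python
TOTAL_GB = 167   # size of one set of files, from the post
FILE_MB = 285    # size of the sample file, from the post


def files_in_set(total_gb, file_mb):
    """Approximate file count, assuming uniform file size and decimal units."""
    return total_gb * 1000 / file_mb


def compressed_size_gb(total_gb, ratio):
    """Projected size of the set after compression at the given ratio."""
    return total_gb * ratio


def implied_seconds_per_file(total_hours, n_files):
    """Per-file compression time implied by a total run time."""
    return total_hours * 3600 / n_files


n_files = files_in_set(TOTAL_GB, FILE_MB)

# (label, compressed fraction of original, total hours) from the post.
options = [
    ("bzip2 --best", 0.024, 9.5),
    ("gzip -1", 0.079, 24 / 60),
]

for label, ratio, hours in options:
    size = compressed_size_gb(TOTAL_GB, ratio)
    per_file = implied_seconds_per_file(hours, n_files)
    print(f"{label}: {size:.1f} GB, {hours:.1f} h total, {per_file:.1f} s per file")

# The trade-off: extra hours spent versus extra gigabytes kept.
extra_hours = options[0][2] - options[1][2]
extra_gb = compressed_size_gb(TOTAL_GB, 0.079) - compressed_size_gb(TOTAL_GB, 0.024)
print(f"bzip2 costs about {extra_hours:.1f} more hours to save about {extra_gb:.1f} GB")
```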

I think that’s a fair trade-off. The extra 9 hours is more important to me than the extra 9GB, and accessing a single file in 1.5 seconds instead of 3.5 also improves usability on the occasions when we need to access the raw data.
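For those occasional reads, one option is to decompress a single archive into a temporary file and open that with whatever NetCDF reader is in use. This is a minimal sketch using only the Python standard library; `gunzip_to_temp` is a hypothetical helper name, and the `.nc.gz` naming is an assumption about how the compressed files are stored.

```python
import gzip
import shutil
import tempfile
from pathlib import Path


def gunzip_to_temp(gz_path, suffix=".nc"):
    """Decompress a .gz file into a new temporary file and return its path.

    The caller is responsible for deleting the temporary file when done.
    """
    with tempfile.NamedTemporaryFile(suffix=suffix, delete=False) as tmp:
        with gzip.open(gz_path, "rb") as src:
            # Stream in chunks so the whole file never sits in memory.
            shutil.copyfileobj(src, tmp)
    return Path(tmp.name)
```

A typical use would be `path = gunzip_to_temp("some_file.nc.gz")`, followed by opening `path` with a NetCDF library and calling `path.unlink()` afterwards; the file name here is a placeholder.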